Generative Models

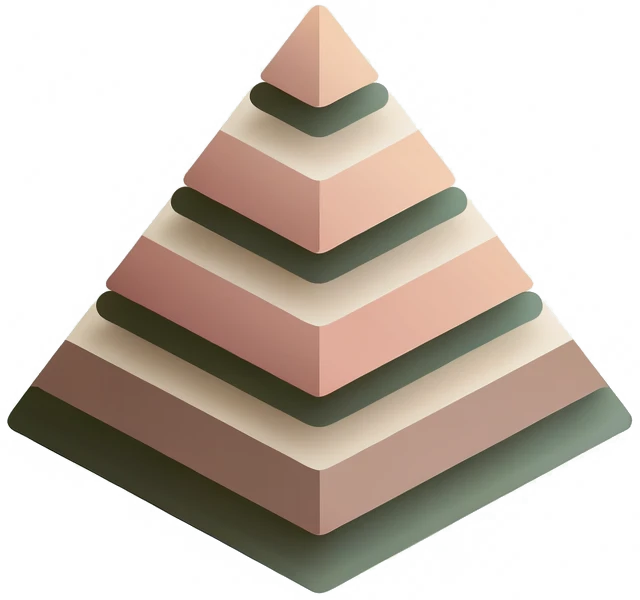

Diffusion models have shown remarkable results in generating 2D images and small-scale 3D objects. However, their application to the synthesis of large-scale 3D scenes has rarely been explored. This is mainly due to the inherent complexity and large size of 3D scene data, particularly outdoor scenes, and the limited availability of comprehensive real-world datasets, which make training a stable scene diffusion model challenging. In this work, we explore how to effectively generate large-scale 3D scenes using the coarse-to-fine paradigm. We introduce a framework, the Pyramid Discrete Diffusion model (PDD), which employs scale-varied diffusion models to progressively generate high-quality outdoor scenes. Experimental results show that PDD successfully generates 3D scenes both unconditionally and conditionally. We further show that, owing to its multi-scale architecture, PDD is data-compatible: a PDD model trained on one dataset can be easily fine-tuned on another dataset.

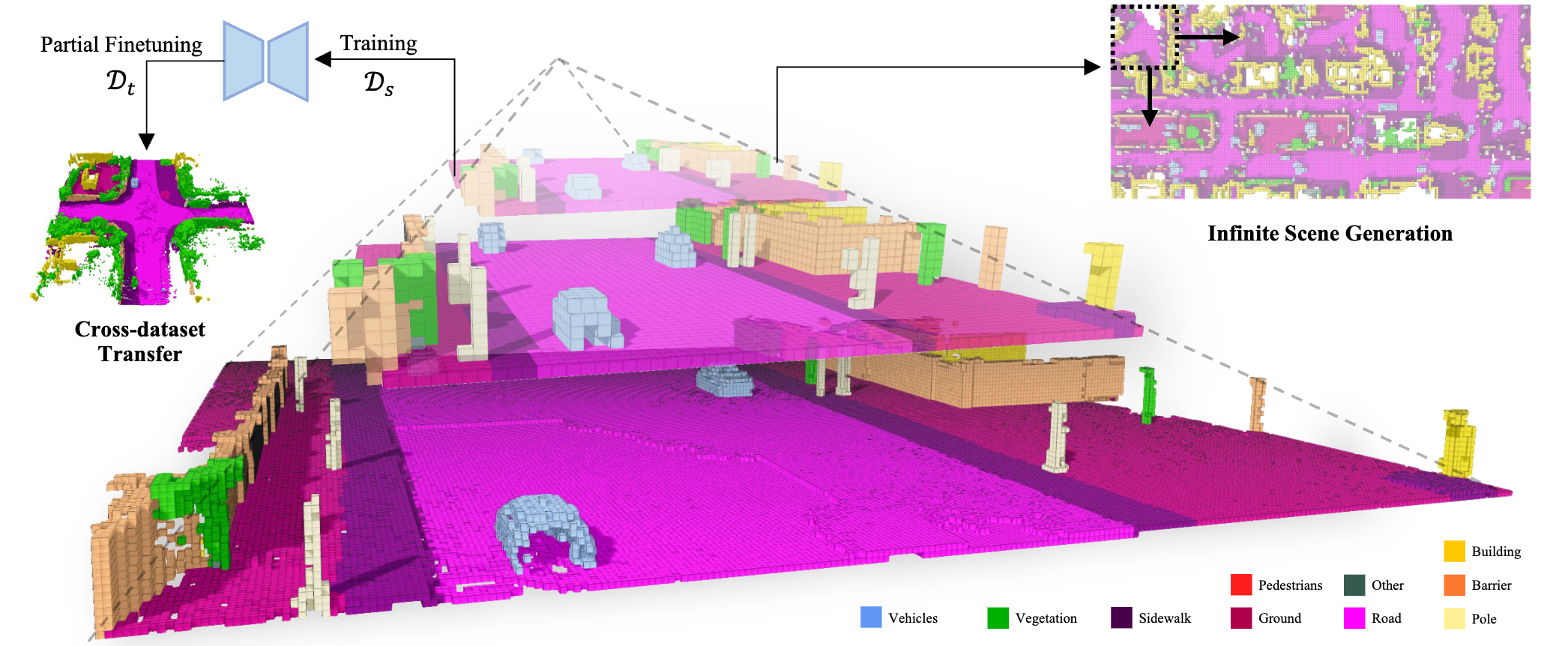

Our framework uses three different scales. A scene generated at the previous scale, after processing by our scale adaptive function, serves as the condition for the current scale. At the final scale, the scene from the previous scale is subdivided into four sub-scenes, and our Scene Subdivision module reassembles the results into a single large scene.
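The data flow between scales can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the scale adaptive function behaves like nearest-neighbour upsampling of a semantic label grid, that the four sub-scenes are the 2x2 quadrants of the ground plane, and the helpers `scale_adapt`, `split_into_subscenes`, and `merge_subscenes` are hypothetical names. The diffusion sampler itself is omitted.

```python
import numpy as np


def scale_adapt(coarse: np.ndarray, target_shape: tuple) -> np.ndarray:
    """Hypothetical scale adaptive function (assumption: nearest-neighbour
    upsampling of a 3D label grid (X, Y, Z) to target_shape, integer factors)."""
    factors = []
    for t, c in zip(target_shape, coarse.shape):
        if t % c != 0:
            raise ValueError("target_shape must be an integer multiple of coarse.shape")
        factors.append(t // c)
    out = coarse
    for axis, f in enumerate(factors):
        out = np.repeat(out, f, axis=axis)  # copy each label f times along this axis
    return out


def split_into_subscenes(scene: np.ndarray) -> list:
    """Assumed subdivision: split the ground plane (X, Y) into 2x2 quadrants."""
    hx, hy = scene.shape[0] // 2, scene.shape[1] // 2
    return [scene[:hx, :hy], scene[:hx, hy:], scene[hx:, :hy], scene[hx:, hy:]]


def merge_subscenes(subs: list) -> np.ndarray:
    """Inverse of split_into_subscenes: reassemble four quadrants into one scene."""
    top = np.concatenate([subs[0], subs[1]], axis=1)
    bottom = np.concatenate([subs[2], subs[3]], axis=1)
    return np.concatenate([top, bottom], axis=0)


# Illustrative sizes: a 64x64x8 scene from the previous scale is split into four
# 32x32x8 sub-scenes, each adapted to 128x128x16 as the condition for the
# final-scale model (not shown). The conditions stand in for generated sub-scenes.
rng = np.random.default_rng(0)
coarse = rng.integers(0, 11, size=(64, 64, 8))
conditions = [scale_adapt(s, (128, 128, 16)) for s in split_into_subscenes(coarse)]
merged = merge_subscenes(conditions)
print(merged.shape)  # (256, 256, 16)
```

Nearest-neighbour upsampling is used here only because it preserves discrete labels exactly; the real scale adaptive function may be different.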

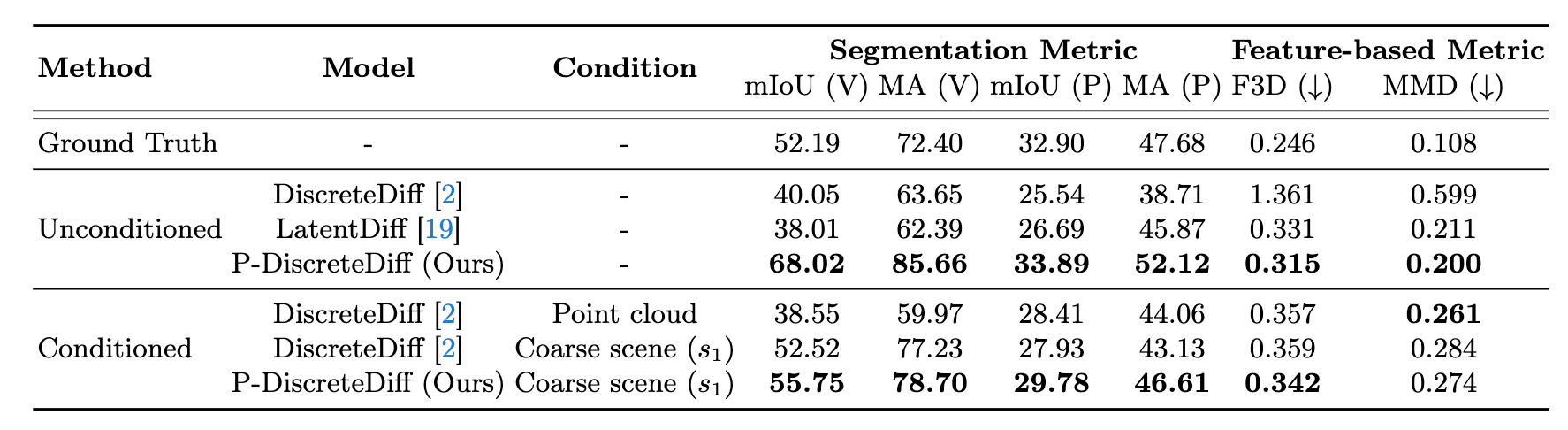

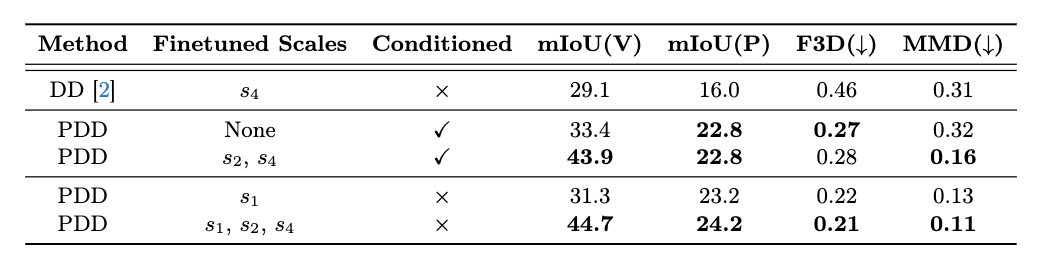

Table 1. Comparison of various diffusion models on 3D semantic scene generation on CarlaSC. DiscreteDiff, LatentDiff, and P-DiscreteDiff refer to the original discrete diffusion, latent discrete diffusion, and our approach, respectively. Conditional models are conditioned on either unlabeled point clouds or a coarse version of the ground-truth scene. A higher Segmentation Metric value is better, indicating semantic consistency. A lower Feature-based Metric value is better, indicating closer proximity to the original dataset. In brackets, V denotes a voxel-based network and P a point-based network.

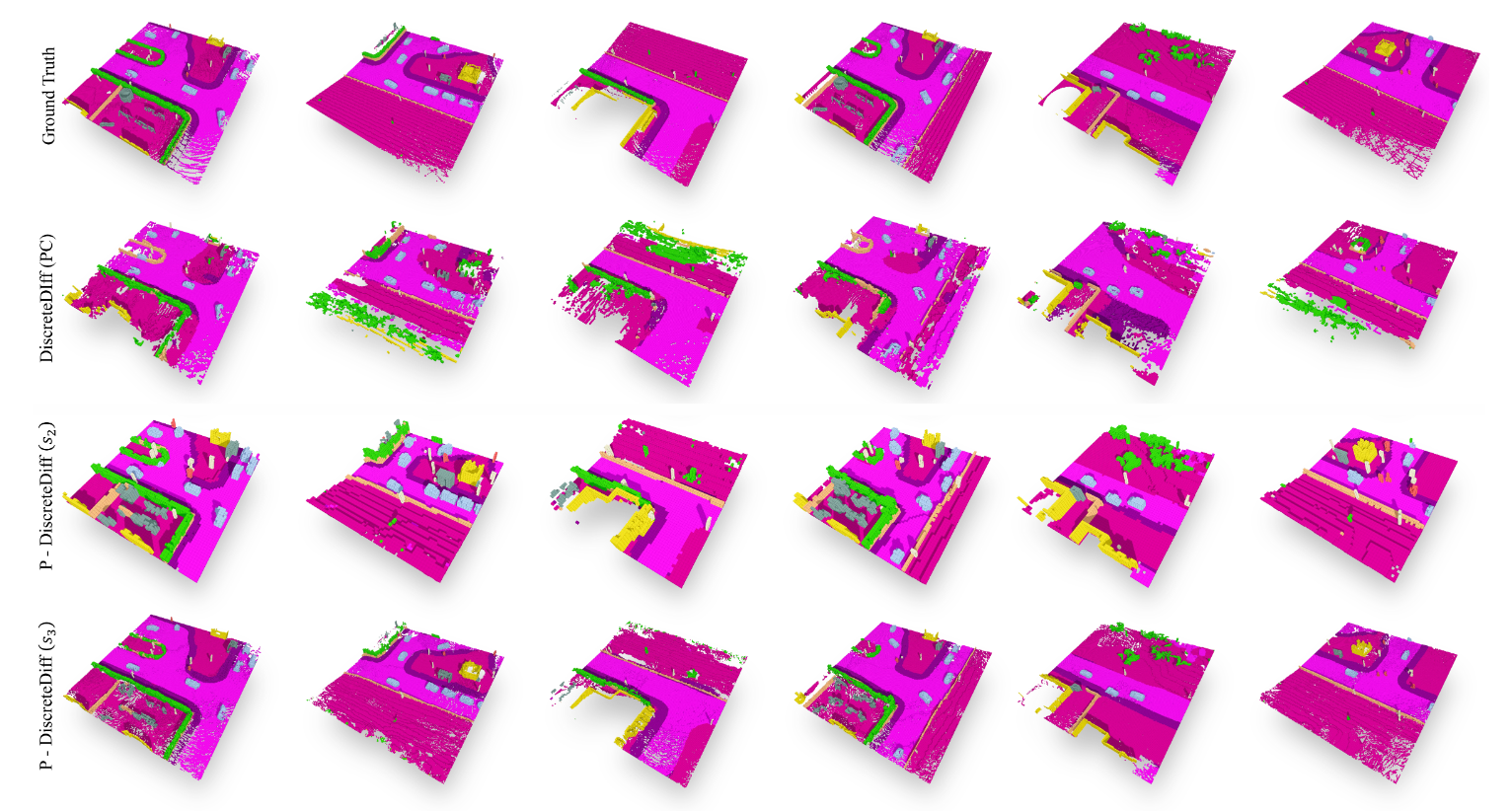

Figure 1. Comparison with two baseline models, DiscreteDiff and LatentDiff, alongside synthesis results from our models at different scales. Our method produces more diverse scenes than the baselines, and with more levels it synthesizes scenes with more intricate details.

We compare our approach with two baselines: the original Discrete Diffusion and Latent Diffusion. As reported in Table 1, our method performs strongly across all metrics in both unconditional and conditional settings, with computational resources comparable to the existing methods. It shows a particularly clear advantage on the segmentation metrics, reaching around 70% mIoU with SparseUNet, which reflects its ability to generate semantically coherent scenes. Figure 1 visualizes results from the different models; for random 3D scene generation, the proposed method produces finer details and more diverse scenes.
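For reference, mIoU is the mean over semantic classes of intersection over union. The sketch below computes it for two voxel label grids using the standard definition; it is a generic illustration, and the exact evaluation protocol behind Table 1 (which network produces the predictions, which classes are ignored, how scores are aggregated over scenes) is not reproduced here.

```python
import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int, ignore_index=None) -> float:
    """Mean IoU over classes present in pred or target: IoU_c = |P_c & T_c| / |P_c | T_c|."""
    ious = []
    for c in range(num_classes):
        if c == ignore_index:
            continue
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both grids: skip rather than count as 0 or 1
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious)) if ious else float("nan")


pred = np.array([0, 0, 1, 1])
target = np.array([0, 1, 1, 1])
print(mean_iou(pred, target, num_classes=2))  # (1/2 + 2/3) / 2, about 0.5833
```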

Figure 2. Comparison on conditional 3D scene generation. We benchmark our method against discrete diffusion conditioned on unlabeled point clouds and on the same coarse scenes. Despite the informative point-cloud condition, our method still outperforms it.

Additionally, we compare methods on conditional 3D scene generation, benchmarking our method against discrete diffusion conditioned on unlabeled point clouds and on the same coarse scenes. Table 1 and Figure 2 show the results of this comparison. We also observe that the point-cloud-conditioned model achieves decent F3D and MMD scores, possibly because 3D point conditions provide more structural information about the scene than coarse scenes do. Despite this informative condition, our method still outperforms it on most metrics.
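MMD (maximum mean discrepancy) compares two sets of feature vectors, here features of generated and real scenes. A minimal sketch of the biased squared-MMD estimate with a Gaussian RBF kernel follows; the feature extractor, kernel, and bandwidth `sigma` used for Table 1 are not given on this page, so these choices are assumptions, and the random arrays only stand in for scene features. F3D is not sketched.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian kernel matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 sigma^2))."""
    sq = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))  # clamp tiny negatives from rounding


def mmd2(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    kxx = rbf_kernel(x, x, sigma).mean()
    kyy = rbf_kernel(y, y, sigma).mean()
    kxy = rbf_kernel(x, y, sigma).mean()
    return float(kxx + kyy - 2.0 * kxy)


rng = np.random.default_rng(0)
real = rng.normal(size=(200, 8))             # stand-in for features of real scenes
close = rng.normal(size=(200, 8))            # same distribution as real
far = rng.normal(loc=3.0, size=(200, 8))     # shifted distribution
print(mmd2(real, close, sigma=4.0) < mmd2(real, far, sigma=4.0))  # lower means closer
```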

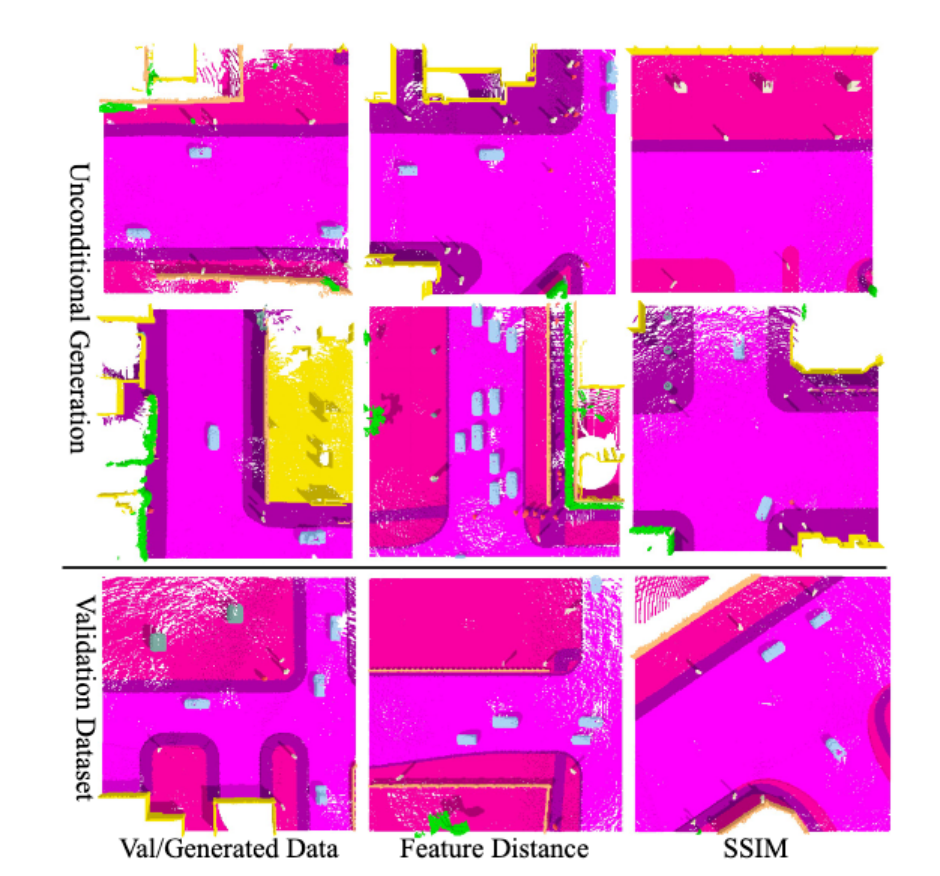

Figure 3. Data retrieval visualization. We generate 1k scenes with PDD trained on the CarlaSC dataset, retrieve the most similar training-set scene for each one using SSIM (SSIM = 1 means identical), and plot the SSIM distribution. Scenes at various percentiles are displayed (red box: generated scenes; grey box: training-set scenes). Even the generated scenes in the top 10% of similarity, while very similar to their training-set matches, are not identical to them.

We use the SSIM distribution plot in Figure 3 to assess how similar our generated scenes are to the training set. The figure also displays three pairs at different percentiles, showing that scenes with lower SSIM scores differ more from their nearest matches in the training set. This visual evidence supports the conclusion that PDD captures the distribution of the training set rather than merely memorizing it.
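The retrieval step amounts to a nearest-neighbour search under SSIM. The sketch below is a simplification under explicit assumptions: it uses a single global SSIM window (standard SSIM averages over local windows), applies it directly to label grids, and `global_ssim` and `retrieve_nearest` are hypothetical helpers. How the page's SSIM is computed on 3D scenes is not specified.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float) -> float:
    """Single-window (global) SSIM; standard SSIM averages this over local windows."""
    x = x.astype(np.float64).ravel()
    y = y.astype(np.float64).ravel()
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2  # usual stabilising constants
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))


def retrieve_nearest(generated, training, data_range):
    """For each generated scene, return (index of most similar training scene, SSIM)."""
    results = []
    for g in generated:
        scores = [global_ssim(g, t, data_range) for t in training]
        best = int(np.argmax(scores))
        results.append((best, scores[best]))
    return results


rng = np.random.default_rng(0)
training = [rng.integers(0, 11, size=(32, 32, 4)) for _ in range(5)]
generated = [training[3].copy()]  # an exact copy should be retrieved with SSIM close to 1
print(retrieve_nearest(generated, training, data_range=10))
```

Plotting the second element of each result as a histogram gives a distribution of the kind described for Figure 3.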

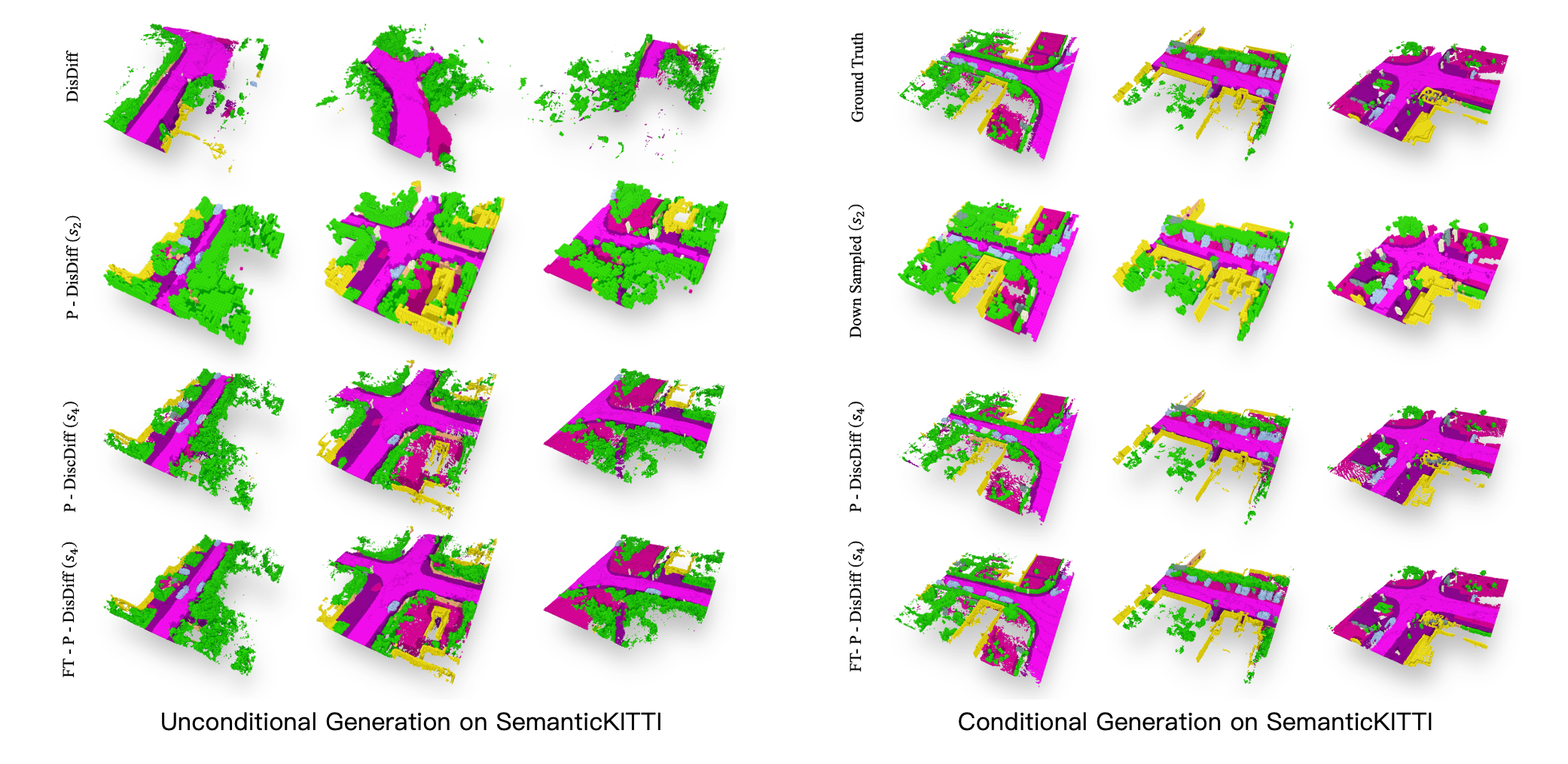

Figures 4 and 5. SemanticKITTI unconditional and conditional generation. FT denotes fine-tuning a model pre-trained on CarlaSC.

Table 3. Generation results on SemanticKITTI. Finetuned Scales set to None denotes training from scratch; other settings denote fine-tuning the corresponding pre-trained CarlaSC model.

Figures 4 and 5 show our model's performance when transferred from CarlaSC to SemanticKITTI for both unconditional and conditional scene generation. After fine-tuning on SemanticKITTI data, the Pyramid Discrete Diffusion model generates higher-quality scenes, as indicated by the improved mIoU, F3D, and MMD metrics in Table 3. Fine-tuning effectively adapts the model to the dataset's complex object distributions and scene dynamics, improving results in both generation settings. We also highlight that, even though the Discrete Diffusion (DD) approach requires more training effort, our method outperforms DD without any fine-tuning, simply by using coarse scenes from SemanticKITTI. This demonstrates the strong cross-dataset transfer capability of our approach.
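Using coarse scenes from a new dataset requires coarsening its full-resolution ground truth. The page does not say how coarse scenes are produced; one plausible choice, assumed here, is majority-vote pooling over voxel blocks. `majority_downsample` is a hypothetical helper and the grid sizes are illustrative only.

```python
import numpy as np


def majority_downsample(scene: np.ndarray, factors: tuple, num_classes: int) -> np.ndarray:
    """Coarsen a 3D label grid by taking the most frequent label in each block.
    Ties go to the smallest class index (np.argmax returns the first maximum)."""
    (X, Y, Z), (fx, fy, fz) = scene.shape, factors
    if X % fx or Y % fy or Z % fz:
        raise ValueError("scene shape must be divisible by factors")
    blocks = (
        scene.reshape(X // fx, fx, Y // fy, fy, Z // fz, fz)
        .transpose(0, 2, 4, 1, 3, 5)             # move block-internal axes to the end
        .reshape(X // fx, Y // fy, Z // fz, -1)  # one row of labels per coarse voxel
    )
    # Count occurrences of every class inside each block, then pick the majority.
    counts = np.stack([(blocks == c).sum(-1) for c in range(num_classes)], axis=-1)
    return counts.argmax(-1)


rng = np.random.default_rng(0)
fine = rng.integers(0, 20, size=(64, 64, 8))
coarse = majority_downsample(fine, factors=(2, 2, 2), num_classes=20)
print(coarse.shape)  # (32, 32, 4)
```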

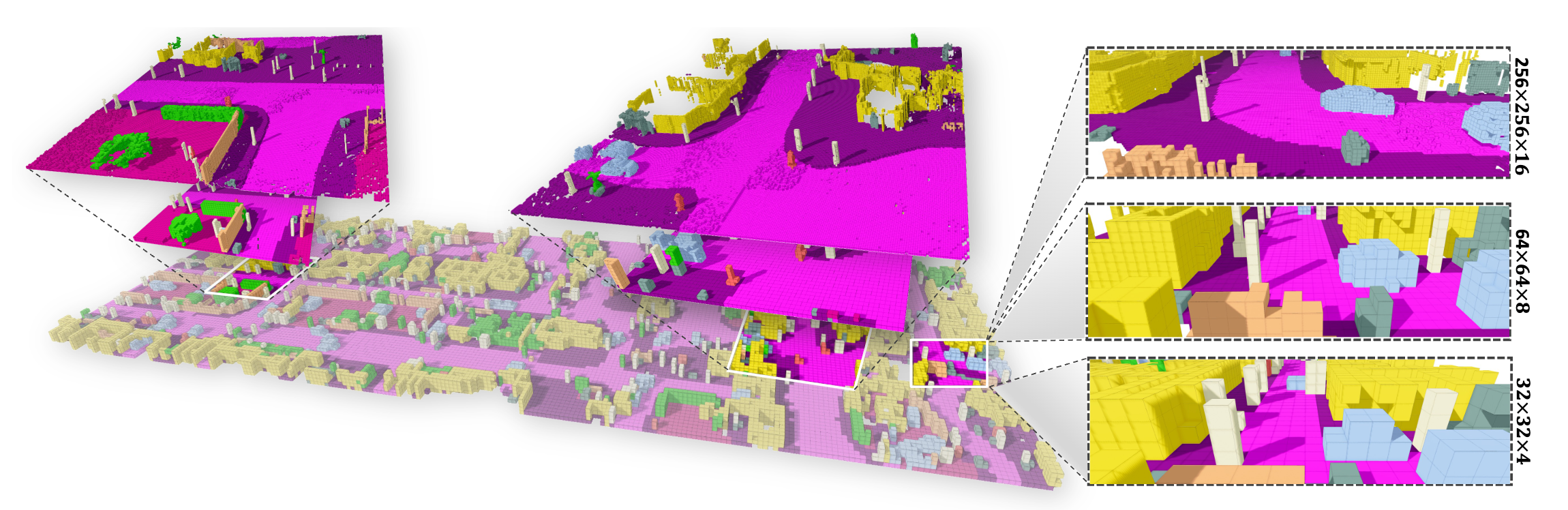

Figure 6. Infinite scene generation. Thanks to the pyramid representation, PDD can be readily applied to unbounded scene generation: it first efficiently synthesizes a large-scale coarse 3D scene and then refines it at higher levels.

Figure 6 visualizes the process of generating large-scale infinite scenes with our PDD model. We first use the small-scale model to quickly generate a coarse infinite 3D scene, shown at the bottom level of Figure 6. We then use the models at larger scales to progressively add more intricate details to the scene (middle and top levels of Figure 6), improving its realism. As a result, our model produces high-quality continuous cityscapes without relying on additional inputs. This alleviates a key limitation of conventional datasets, which contain only a finite number of scenes, and paves the way for providing data to downstream tasks such as 3D scene segmentation.
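The page does not detail how the coarse scene is extended without bound. One common approach, assumed here and not confirmed as PDD's method, is outpainting: grow the scene tile by tile, conditioning each new tile on the region it overlaps with what has already been generated. `fake_sampler` is a hypothetical placeholder for a conditional diffusion sampler, and refinement at larger scales is not shown.

```python
import numpy as np


def fake_sampler(window: np.ndarray, known: np.ndarray, rng, num_classes: int) -> np.ndarray:
    """Hypothetical stand-in for a conditional diffusion sampler: it keeps the
    known voxels and fills the unknown ones with random labels."""
    out = window.copy()
    out[~known] = rng.integers(0, num_classes, size=int((~known).sum()))
    return out


def extend_scene(num_tiles: int, tile_shape: tuple, overlap: int, num_classes: int, seed: int = 0) -> np.ndarray:
    """Grow a coarse scene along Y one tile at a time; each new tile is conditioned
    on the `overlap` columns it shares with the already generated part."""
    rng = np.random.default_rng(seed)
    X, Y, Z = tile_shape
    step = Y - overlap
    scene = np.zeros((X, Y + (num_tiles - 1) * step, Z), dtype=np.int64)
    for t in range(num_tiles):
        y0 = t * step
        known = np.zeros(tile_shape, dtype=bool)
        if t > 0:
            known[:, :overlap] = True  # columns shared with the previous tile act as context
        scene[:, y0:y0 + Y] = fake_sampler(scene[:, y0:y0 + Y], known, rng, num_classes)
    return scene


coarse_strip = extend_scene(num_tiles=5, tile_shape=(32, 32, 4), overlap=16, num_classes=11)
print(coarse_strip.shape)  # (32, 96, 4)
```

Because each tile only sees a fixed-size window, the cost of this loop grows linearly with the length of the scene, which is what makes starting at the smallest scale attractive.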

Interactive 3D previews: 32x32x4, 64x64x8, 256x256x16.

@article{liu2024pyramiddiffusionfine3d,
title={Pyramid Diffusion for Fine 3D Large Scene Generation},
author={Yuheng Liu and Xinke Li and Xueting Li and Lu Qi and Chongshou Li and Ming-Hsuan Yang},
year={2024},
journal={arXiv preprint arXiv:2311.12085}
}